By Valarie Waswa

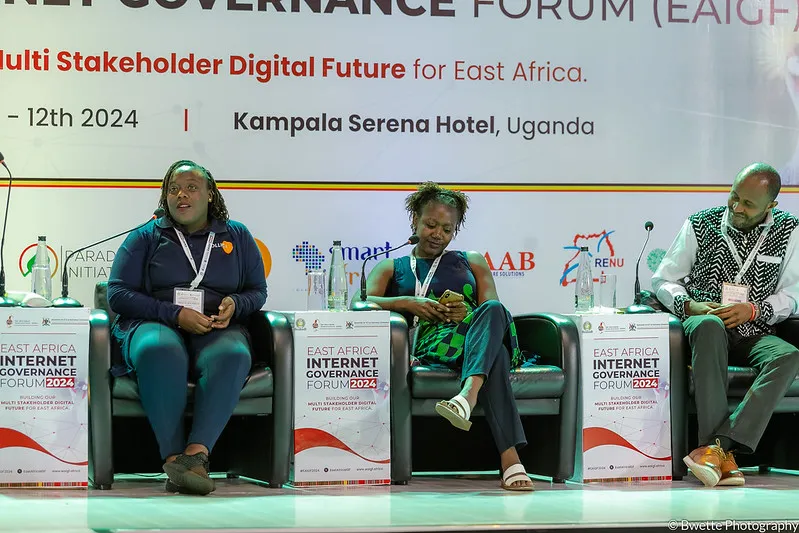

On Day 2 of the East African Internet Governance Forum 2024, a key breakout panel discussion titled “Inclusive AI Governance: Enhancing Human Rights and Ethical Development in East Africa” took centre stage.

Moderated by Muthuri Kathure, the panel featured experts in the field, including Rachel Magege, Louis Gitinywa, Angela Minayo, and Tricia Gloria Nabaye.

Together, they unpacked the complexities of AI governance, its intersection with human rights, and the broader ethical implications for East Africa.

Navigating AI and Human Rights

The discussion kicked off with an exploration of the existing frameworks governing AI and human rights, notably referencing the UN Guiding Principles on Business & Human Rights.

These principles, Angela emphasized, provide a roadmap for AI companies to ensure their innovations align with human rights standards. She highlighted the importance of conducting due diligence, stressing that companies must integrate mechanisms such as AI sandboxes to safely test and experiment with technologies before rolling them out.

A key concern was raised about the need for effective complaint-handling mechanisms. With AI becoming more embedded in our lives, ensuring that there are efficient ways for users to report issues, particularly cross-border ones in East Africa, is essential. These mechanisms also need to address jurisdictional challenges, especially when AI products transcend borders.

The Dual Nature of AI

One of the most thought-provoking segments came when the conversation shifted to the risks posed by AI, especially in fueling misinformation and disinformation.

AI, as highlighted by the panel, is a double-edged sword. While it can advance societal goals, it can also be misused. For example, Angela pointed to recent disinformation campaigns in Kenya, where some MPs falsely claimed that images of the Maandamano protests were AI-generated and misrepresented what had happened.

The discussion then delved into accountability. Tricia was asked who should be held responsible when AI technologies cause harm or violate rights. Her response pointed squarely at Big Tech.

She argued that these corporations wield significant influence and should bear the greatest share of accountability. However, she also advocated for the localization of AI solutions, suggesting that developing homegrown technologies would better serve the region.

Tricia emphasized the need for governments to establish regulatory bodies that can hold not only corporations but also governments accountable. She further stressed the importance of whistleblowers in maintaining transparency.

A particularly striking point Tricia made was about Uganda’s current landscape. She noted that while there are currently no active cases involving AI-related violations in the country, end-users should engage with AI technologies more responsibly. Her call for greater consumer awareness underscored the importance of a rights-based approach to AI governance.

Who Controls the Purse Strings?

Louis Gitinywa, representing Rwanda, brought the conversation to a critical point: who controls the resources in the AI ecosystem? While Rwanda has made strides with its National AI Strategy, the country remains largely a consumer of AI products rather than a producer.

Louis pointed out that the major players in AI are large multinational tech companies whose financial power eclipses the GDPs of entire nations in the region. These companies, he argued, are not only hard to regulate but also hold disproportionate influence over the development of AI policies.

The conversation on AI awareness was equally enlightening. Angela responded to a question on whether East African citizens are sufficiently informed about AI and its implications, particularly around data protection. She made an interesting point: while innovation is a form of expression—a fundamental human right—any regulation should pass the three-part test: legitimacy, proportionality, and necessity.

She cautioned against rushing to regulate AI without fully understanding its nuances, emphasizing that a one-size-fits-all approach, especially one based on European standards, would not work for East Africa.

Instead, the region needs context-specific policies that reflect its unique governance structures and human rights challenges.

Ethics vs. Human Rights in AI Governance

The ethical dimension of AI governance also featured prominently. Louis noted that ethical considerations must be infused into AI technologies from the design stage.

However, Angela offered a counterpoint: while ethics can be subjective, varying from one country or culture to another, human rights are universal. She advocated for using human rights as the benchmark for AI governance across East Africa, ensuring that AI technologies do not infringe on these fundamental rights.

To highlight the positive social impacts of AI, Louis shared Rwanda’s success story in using drones for healthcare purposes. Since 2016, the country has deployed AI-powered drones to deliver blood and medicines to remote areas, a clear example of how AI can be harnessed for social good.

On the flip side, Tricia provided a sobering reminder that not all East African countries enjoy the same democratic freedoms, which poses challenges to crafting a regional AI governance framework. For instance, she cited Uganda’s digital number plates and extensive surveillance systems, warning against using AI to entrench authoritarianism.

The Debate on a Unified AI Governance Framework

As the session opened up to audience questions, a critical debate emerged: can East Africa develop a unified AI governance framework? While many saw the potential for such a framework to enhance the region’s collective bargaining power on the global stage, others were sceptical.

The region’s diverse socio-economic realities, political landscapes, and governance structures make it difficult to standardize AI regulations.

One panellist noted the political dimension of technology, particularly around data control, as a key barrier. As each country seeks to maintain sovereignty over its data, developing a common regulatory framework becomes even more challenging.

Angela added that the judiciary’s capacity to interpret and enforce AI regulations also differs across the region, complicating efforts to harmonize laws. For instance, Kenya has a stringent approach to cybercrime, which may not align with the practices in other countries.

Another panellist remarked that solving national-level issues around cybersecurity and data protection is a prerequisite to tackling them at the regional level.

Spotlighting AI’s Benefits

The discussion also touched on the need to shift the narrative around AI from fear to opportunity. A question from the floor asked why the discourse often focuses on the risks, rather than spotlighting the benefits of AI.

Panellists agreed that while AI does come with risks, it also holds immense potential for improving livelihoods in the region. Building local AI capacity, integrating AI into education systems, and supporting African tech entrepreneurs were identified as key steps to fostering a more positive AI ecosystem.

A powerful comment from the floor underscored the lack of trust in local innovations. The sentiment that African AI products are inferior to those from outside the continent persists, and without adequate support for local developers, Africa will remain a consumer rather than a creator of AI technologies.

Capacity Building for Enforcement

I took the opportunity to ask the panel about capacity building for enforcement bodies, such as the judiciary, to handle AI-related violations. Angela responded by highlighting a project she worked on with Article 19 in Kenya that provided training to judicial officers.

However, she acknowledged the sustainability challenge, as such projects are often funding-dependent. She urged stakeholders to explore more sustainable solutions to capacity building, particularly as the need for expertise in AI-related legal cases continues to grow.

The panel also discussed the importance of integrating AI into formal education systems, not just at the higher levels but from kindergarten onwards. AI literacy, they argued, needs to be embedded into the curriculum to prepare younger generations for an increasingly digital world.

What Unites Us?

In a follow-up question, I challenged the panel on the notion that ethical differences prevent the formation of a unified AI governance framework in East Africa.

I argued that what unites us as East Africans is greater than what divides us. So, what compromises could be made to create a middle ground for regional AI governance?

The response was mixed. While there was agreement that a middle ground could be found, the political and economic realities of each country still present significant barriers.

As one panellist noted, technology is inherently political, and countries are reluctant to cede control, especially over data and AI development.

Closing Remarks

As the session came to a close, the panellists left us with powerful parting words. One emphasized the need for civil society organizations (CSOs) to step up and hold legislators accountable, especially in pushing for legal reforms around AI.

Another underscored the importance of involving youth in AI regulation, noting that in Uganda, even though 70 per cent of the population is young, they are grossly underrepresented in AI decision-making processes.

Finally, the panellists called for home-grown solutions to AI governance, and the overarching message was clear: regulate, but don’t overregulate.

Ms Valarie Waswa, Legal Fellow – KICTANet
